Difference between revisions of "Source Analysis 3D Imaging"

| (96 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

| − | == 3D Imaging == | + | {{BESAInfobox |

| + | |title = Module information | ||

| + | |module = BESA Research Standard or higher | ||

| + | |version = BESA Research 6.1 or higher | ||

| + | }} | ||

| + | |||

| + | <!-- == 3D Imaging == --> | ||

| + | == Overview == | ||

BESA Research features a set of new functions that provide 3D images that are displayed superimposed to the individual subject's anatomy. This chapter introduces these different images and describe their properties and applications. | BESA Research features a set of new functions that provide 3D images that are displayed superimposed to the individual subject's anatomy. This chapter introduces these different images and describe their properties and applications. | ||

| Line 6: | Line 13: | ||

| − | '''Volume images:''' | + | <span style="color:#3366ff;">'''Volume images:'''</span> |

| − | + | ||

| − | + | ||

| − | + | ||

| + | * '''The Multiple Source Beamformer (MSBF)''' is a tool for imaging brain activity. It is applied in the time-domain or time-frequency domain. The beamformer technique in time-frequency domain can image not only evoked, but also induced activity, which is not visible in time-domain averages of the data. | ||

| + | * '''Dynamic Imaging of Coherent Sources (DICS)''' can find coherence between any two pairs of voxels in the brain or between an external source and brain voxels. DICS requires time-frequency-transformed data and can find coherence for evoked and induced activity. | ||

The following imaging methods provide an image of brain activity based on a distributed multiple source model: | The following imaging methods provide an image of brain activity based on a distributed multiple source model: | ||

| Line 19: | Line 25: | ||

* '''swLORETA '''is equivalent to sLORETA, except for an additional depth weighting. | * '''swLORETA '''is equivalent to sLORETA, except for an additional depth weighting. | ||

* '''SSLOFO '''is an iterative application of standardized minimum norm images with consecutive shrinkage of the source space. | * '''SSLOFO '''is an iterative application of standardized minimum norm images with consecutive shrinkage of the source space. | ||

| − | * ''' | + | * A '''User-defined volume image''' allows to experiment with the different imaging techniques. It is possible to specify user-defined parameters for the family of distributed source images to create a new imaging technique. |

| + | * Bayesian source imaging: '''SESAME''' uses a semi-automated Bayesian approach to estimate the number of dipoles along with their parameters. | ||

| + | <span style="color:#3366ff;">'''Surface image:'''</span> | ||

| − | |||

* The '''Surface Minimum Norm Image'''. If no individual MRI is available, the minimum norm image is displayed on a standard brain surface and computed for standard source locations. If available, an individual brain surface is used to construct the distributed source model and to image the brain activity. | * The '''Surface Minimum Norm Image'''. If no individual MRI is available, the minimum norm image is displayed on a standard brain surface and computed for standard source locations. If available, an individual brain surface is used to construct the distributed source model and to image the brain activity. | ||

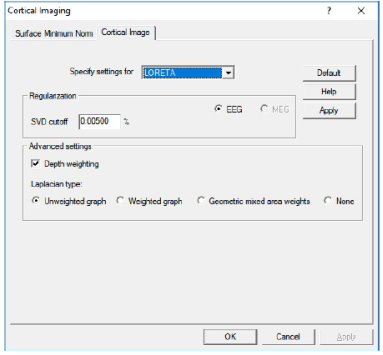

* '''Cortical LORETA'''. Unlike classical LORETA, cortical LORETA is not computed in a 3D volume, but on the cortical surface. | * '''Cortical LORETA'''. Unlike classical LORETA, cortical LORETA is not computed in a 3D volume, but on the cortical surface. | ||

| Line 29: | Line 36: | ||

| − | + | <span style="color:#3366ff;">'''Discrete model probing:'''</span> | |

| − | '''Discrete model probing:''' | + | |

These images do not visualize source activity. Rather, they visualize properties of the currently applied discrete source model: | These images do not visualize source activity. Rather, they visualize properties of the currently applied discrete source model: | ||

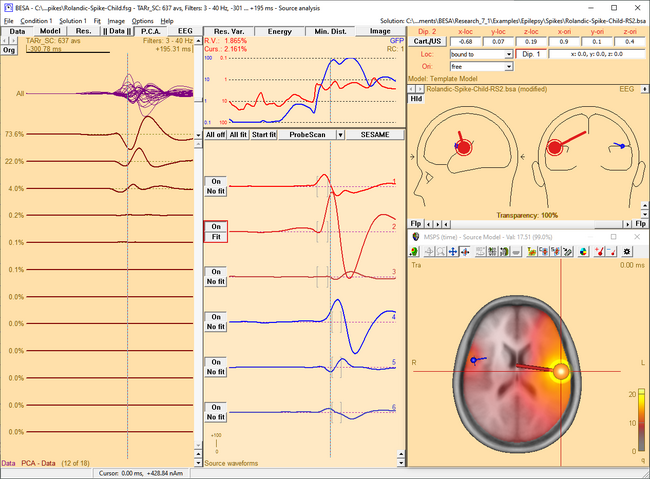

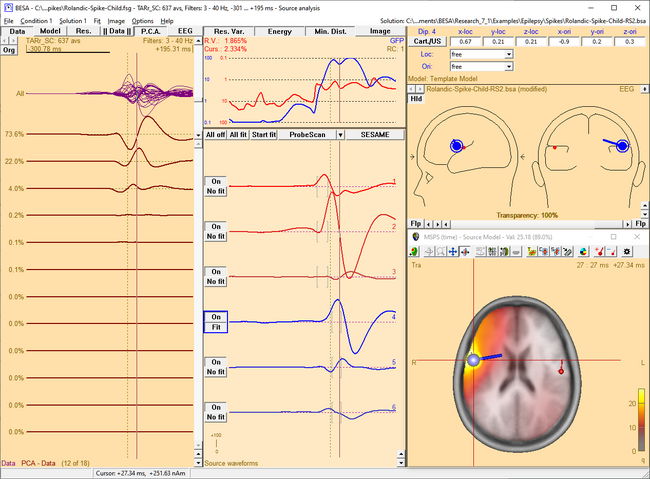

* The '''Multiple Source Probe Scan (MSPS)''' is a tool for the validation of a discrete multiple source model. | * The '''Multiple Source Probe Scan (MSPS)''' is a tool for the validation of a discrete multiple source model. | ||

| − | * The''' Source Sensitivity image''' displays the sensitivity of a selected source in the current discrete source model and is therefore data independent. | + | * The '''Source Sensitivity image''' displays the sensitivity of a selected source in the current discrete source model and is therefore data independent. |

| − | + | ||

| − | + | ||

| − | + | == Multiple Source Beamformer (MSBF) in the Time-frequency Domain == | |

| + | <br> | ||

'''Short mathematical introduction''' | '''Short mathematical introduction''' | ||

| − | The BESA beamformer is a modified version of the linearly constrained minimum variance vector beamformer in the time-frequency domain as described in Gross et al., "Dynamic imaging of coherent sources: Studying neural interactions in the human brain", PNAS 98, | + | The BESA beamformer is a modified version of the linearly constrained minimum variance vector beamformer in the time-frequency domain as described in [https://dx.doi.org/10.1073/pnas.98.2.694 Gross et al., "Dynamic imaging of coherent sources: Studying neural interactions in the human brain", PNAS 98, 694-699, 2001]. It allows to image evoked and induced oscillatory activity in a user-defined time-frequency range, where time is taken relative to a triggered event. |

The computation is based on a transformation of each channel's single trial data from the time domain into the time-frequency domain. This transformation is performed by the BESA Research Source Coherence module and leads to the complex spectral density S<sub>i</sub> (f,t), where i is the channel index and f and t denote frequency and time, respectively. Complex cross spectral density matrices C are computed for each trial: | The computation is based on a transformation of each channel's single trial data from the time domain into the time-frequency domain. This transformation is performed by the BESA Research Source Coherence module and leads to the complex spectral density S<sub>i</sub> (f,t), where i is the channel index and f and t denote frequency and time, respectively. Complex cross spectral density matrices C are computed for each trial: | ||

| − | [[Image:SA 3Dimaging (1).gif]] | + | <math>\mathrm{C}_{ij}\left( f,t \right) = \mathrm{S}_{i}\left( f,t \right) \cdot \mathrm{S}_{j}^{*}\left( f,t \right)</math> |

| + | <!-- [[Image:SA 3Dimaging (1).gif]] --> | ||

| Line 53: | Line 59: | ||

| − | [[Image:SA 3Dimaging (2).gif]] | + | <math>\mathrm{P}\left( r \right) = \operatorname{tr^{'}}\left\lbrack \mathrm{L}^{T}\left( r \right) \cdot \mathrm{C}_{r}^{-1} \cdot \mathrm{L}\left( r \right) \right\rbrack^{-1}</math> |

| + | <!-- [[Image:SA 3Dimaging (2).gif]] --> | ||

| − | Here, C<sub>r</sub><sup>-1</sup> is the inverse of the SVD-regularized average of C<sub>ij</sub>(f,t) over trials and the time-frequency range of interest; L is the leadfield matrix of the model containing a regional source at target location r and, optionally, additional sources whose interference with the target source is to be minimized; tr'[] is the trace of the [ | + | Here, C<sub>r</sub><sup>-1</sup> is the inverse of the SVD-regularized average of C<sub>ij</sub>(f,t) over trials and the time-frequency range of interest; L is the leadfield matrix of the model containing a regional source at target location r and, optionally, additional sources whose interference with the target source is to be minimized; tr'[] is the trace of the [3×3] (MEG:[2×2]) submatrix of the bracketed expression that corresponds to the source at target location r. |

| − | In BESA Research, the output power P(r) is normalized with the output power in a reference time-frequency interval P<sub>ref</sub>(r). A value q ist defined as follows: | + | In BESA Research, the output power P(r) is normalized with the output power in a reference time-frequency interval P<sub>ref</sub>(r). A value q ist defined as follows: |

| + | <math> \mathrm{q}\left( r \right) = | ||

| + | \begin{cases} | ||

| + | \sqrt{\frac{\mathrm{P}\left( r \right)}{\mathrm{P}_{\text{ref}}(r)}} - 1 = \sqrt{\frac{\operatorname{tr^{'}}\left\lbrack \mathrm{L}^{T}\left( r \right) \cdot \mathrm{C}_{r}^{- 1} \cdot \mathrm{L}\left( r \right) \right\rbrack^{- 1}}{\operatorname{tr^{'}}\left\lbrack \mathrm{L}^{T}\left( r \right) \cdot \mathrm{C}_{\text{ref},r}^{- 1} \cdot \mathrm{L}\left( r \right) \right\rbrack^{- 1}}} - 1, & \text{for }\mathrm{P}(r) \geq \mathrm{P}_{\text{ref}}(r) \\ | ||

| − | [[Image:SA 3Dimaging (3).gif]] | + | 1 - \sqrt{\frac{\mathrm{P}_{\text{ref}}\left( r \right)}{\mathrm{P}\left( r \right)}} = 1 - \sqrt{\frac{\operatorname{tr^{'}}\left\lbrack \mathrm{L}^{T}\left( r \right) \cdot \mathrm{C}_{\text{ref},r}^{- 1} \cdot \mathrm{L}\left( r \right) \right\rbrack^{- 1}}{\operatorname{tr^{'}}\left\lbrack \mathrm{L}^{T}\left( r \right) \cdot \mathrm{C}_{r}^{- 1} \cdot \mathrm{L}\left( r \right) \right\rbrack^{- 1}}}, & \text{for }\mathrm{P}(r) < \mathrm{P}_{\text{ref}}(r) |

| − | + | \end{cases} </math> | |

| + | <!-- [[Image:SA 3Dimaging (3).gif]] --> | ||

P<sub>ref </sub>can be computed either from the corresponding frequency range in the baseline of the same condition (i.e. the beamformer images event-related power increase or decrease) or from the corresponding time-frequency range in a control condition (i.e. the beamformer images differences between two conditions). The beamformer image is constructed from values q(r) computed for all locations on a grid specified in the '''General Settings tab'''. For MEG data, the innermost grid points within a sphere of approx. 12% of the head diameter are assigned interpolated rather than calculated values). | P<sub>ref </sub>can be computed either from the corresponding frequency range in the baseline of the same condition (i.e. the beamformer images event-related power increase or decrease) or from the corresponding time-frequency range in a control condition (i.e. the beamformer images differences between two conditions). The beamformer image is constructed from values q(r) computed for all locations on a grid specified in the '''General Settings tab'''. For MEG data, the innermost grid points within a sphere of approx. 12% of the head diameter are assigned interpolated rather than calculated values). | ||

| + | q-values are shown in %, where where q[%] = q*100. Alternatively to the definition above, q can also be displayed in units of dB: | ||

| − | q- | + | <math>\mathrm{q}\left\lbrack \text{dB} \right\rbrack = 10 \cdot \log_{10}\frac{\mathrm{P}\left( r \right)}{\mathrm{P}_{\text{ref}}\left( r \right)}</math> |

| + | <!-- [[Image:SA 3Dimaging (4).gif]] --> | ||

| − | + | A beamformer operator is designed to pass signals from the brain region of interest r without attenuation, while minimizing interference from activity in all other brain regions. Traditional single-source beamformers are known to mislocalize sources if several brain regions have highly correlated activity. Therefore, the BESA beamformer extends the traditional single-source beamformer in order to implicitly suppress activity from possibly correlated brain regions. This is achieved by using a multiple source beamformer calculation that contains not only the leadfields of the source at the location of interest r, but also those of possibly interfering sources. As a default, BESA Research uses a bilateral beamformer, where specifically contributions from the homologue source in the opposite hemisphere are taken into account (the matrix L thus being of dimension N×6 for EEG and N×4 for MEG, respectively, where N is the number of sensors). This allows for imaging of highly correlated bilateral activity in the two hemispheres that commonly occurs during processing of external stimuli. | |

| − | + | ||

| − | + | ||

| − | A beamformer operator is designed to pass signals from the brain region of interest r without attenuation, while minimizing interference from activity in all other brain regions. Traditional single-source beamformers are known to mislocalize sources if several brain regions have highly correlated activity. Therefore, the BESA beamformer extends the traditional single-source beamformer in order to implicitly suppress activity from possibly correlated brain regions. This is achieved by using a multiple source beamformer calculation that contains not only the leadfields of the source at the location of interest r, but also those of possibly interfering sources. As a default, BESA Research uses a bilateral beamformer, where specifically contributions from the homologue source in the opposite hemisphere are taken into account (the matrix L thus being of dimension | + | |

In addition, the beamformer computation can take into account possibly correlated sources at arbitrary locations that are specified in the current solution. This is achieved by adding their leadfield vectors to the matrix L in the equation above. | In addition, the beamformer computation can take into account possibly correlated sources at arbitrary locations that are specified in the current solution. This is achieved by adding their leadfield vectors to the matrix L in the equation above. | ||

| Line 81: | Line 91: | ||

'''Applying the Beamformer''' | '''Applying the Beamformer''' | ||

| − | + | This chapter illustrates the usage of the BESA beamformer. The displayed figures are generated using the file <span style="color:#ff9c00;">''''Examples/Learn-by-Simulations/AC-Coherence/AC-Osc20.foc''''</span> (see BESA Tutorial 12: "''Time-frequency analysis, Connectivity analysis, and Beamforming''"). | |

| − | This chapter illustrates the usage of the BESA beamformer. The displayed figures are generated using the file <span style="color:#ff9c00;">''''Examples/Learn-by-Simulations/AC-Coherence/AC-Osc20.foc''''</span> (see BESA Tutorial | + | |

'''Starting the beamformer from the time-frequency window''' | '''Starting the beamformer from the time-frequency window''' | ||

| − | + | The BESA beamformer is applied in the time-frequency domain and therefore requires the Source Coherence module to be enabled. The time-frequency beamformer is especially useful to image in- or decrease of induced oscillatory activity. Induced activity cannot be observed in the averaged data, but shows up as enhanced averaged power in the TSE (Temporal-Spectral Evolution) plot. For instructions on how to initiate a beamformer computation in the time-frequency window, please refer to Chapter '''[[Source_Coherence_How_to...#How_to_Start_the_Beamformer_from_the_Time-Frequency_Window|How to Create Beamformer Images]]'''. | |

| − | The BESA beamformer is applied in the time-frequency domain and therefore requires the Source Coherence module to be enabled. The time-frequency beamformer is especially useful to image in- or decrease of induced oscillatory activity. Induced activity cannot be observed in the averaged data, but shows up as enhanced averaged power in the TSE (Temporal-Spectral Evolution) plot. For instructions on how to initiate a beamformer computation in the time-frequency window, please refer to Chapter | + | |

| − | + | ||

| − | ''to Create Beamformer Images | + | |

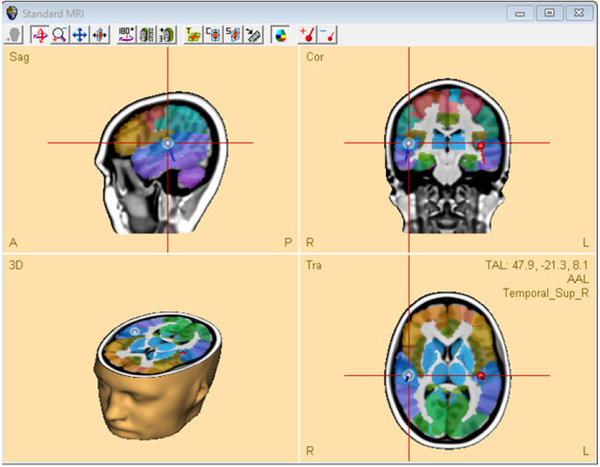

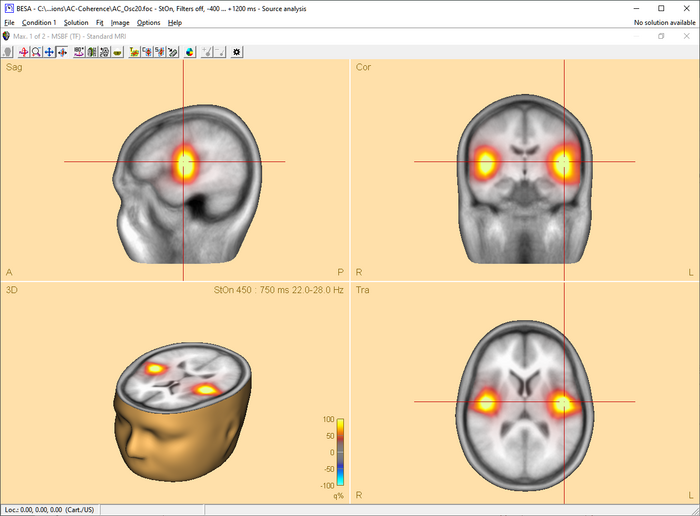

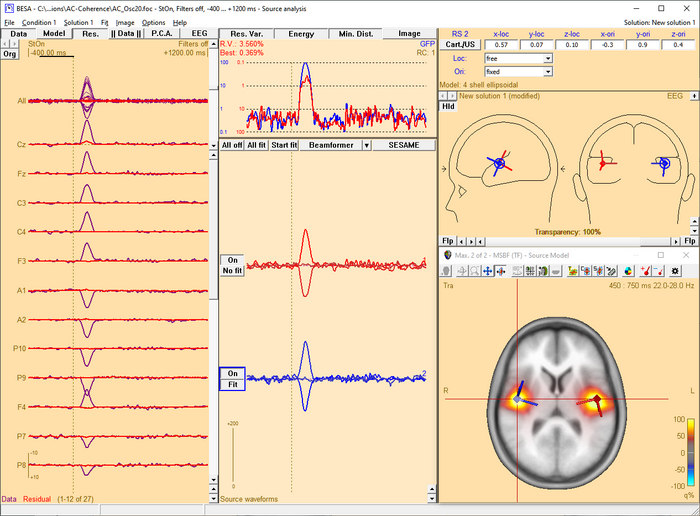

After the beamformer computation has been initiated in the time-frequency window, the source analysis window opens with an enlarged 3D image of the q-value computed with a '''bilateral beamformer'''. The result is superimposed onto the MR image assigned to the data set (individual or standard). | After the beamformer computation has been initiated in the time-frequency window, the source analysis window opens with an enlarged 3D image of the q-value computed with a '''bilateral beamformer'''. The result is superimposed onto the MR image assigned to the data set (individual or standard). | ||

| − | [[Image:SA 3Dimaging (5). | + | [[Image:SA 3Dimaging (5).png|700px|thumb|c|none|Beamformer image after starting the computation in the Time-Frequency window. A bilateral pair of sources in the auditory cortex accounts for the highly correlated oscillatory induced activity. Only the bilateral beamformer manages to separate these activities; a traditional single-source beamformer would merge the two sources into one image maximum in the head center instead.]] |

| − | + | ||

| − | + | ||

| − | + | ||

'''Multiple source beamformer in the Source Analysis window''' | '''Multiple source beamformer in the Source Analysis window''' | ||

| − | |||

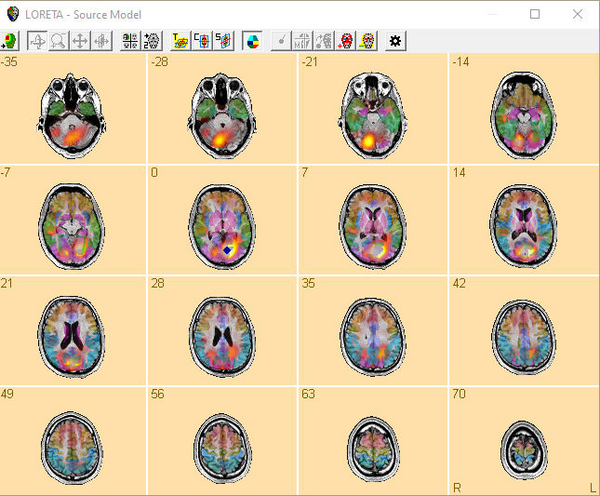

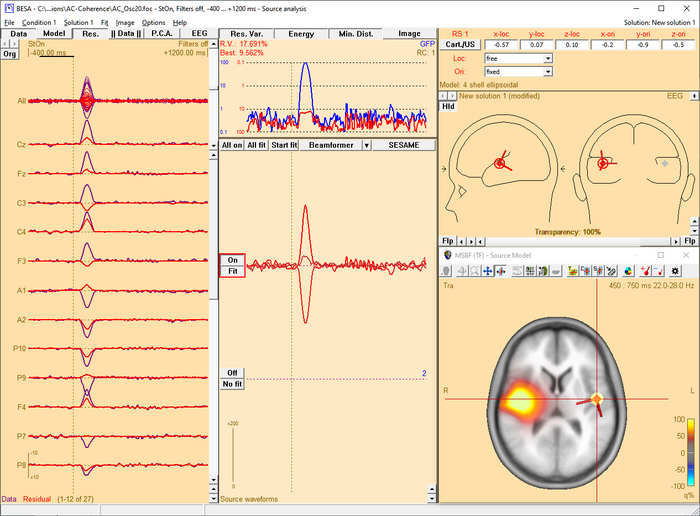

The 3D imaging display is part of the source analysis window. If you press the <span style="color:#3366ff;">'''Restore'''</span> button at the right end of the title bar of the 3D window, the window appears at the bottom right of the source analysis window. In the channel box, the averaged (evoked) data of the selected condition is shown. When a control condition was selected, its average is appended to the average of the target condition. | The 3D imaging display is part of the source analysis window. If you press the <span style="color:#3366ff;">'''Restore'''</span> button at the right end of the title bar of the 3D window, the window appears at the bottom right of the source analysis window. In the channel box, the averaged (evoked) data of the selected condition is shown. When a control condition was selected, its average is appended to the average of the target condition. | ||

| − | [[Image:SA 3Dimaging (6). | + | [[Image:SA 3Dimaging (6).png|700px|thumb|c|none|Source Analysis window with beamformer image. The two sources have been added using the ''<span style="color:#3366ff;">'''Switch to'''</span>'' ''<span style="color:#3366ff;">'''Maximum'''</span>'' and ''<span style="color:#3366ff;">'''Add Source '''</span>''toolbar buttons (see below). Source waveforms are computed from the displayed averaged data. Therefore, they do not represent the activity displayed in the beamformer image, which in this simulation example is induced (i.e. not phase-locked to the trigger)!]] |

| − | + | When starting the beamformer from the time-frequency window, a bilateral beamformer scan is performed. In the source analysis window, the beamformer computation can be repeated taking into account possibly correlated sources that are specified in the current solution. Interfering activities generated by all sources in the current solution that are in the 'On' state are specifically suppressed ('''they enter the matrix L in the beamformer calculation''', see Chapter ''Short mathematical description'' above). The computation can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image selector button'''</span> dropdown menu. The <span style="color:#3366ff;">'''Image'''</span> menu can be evoked either from the menu bar or by right-clicking anywhere in the source analysis window. | |

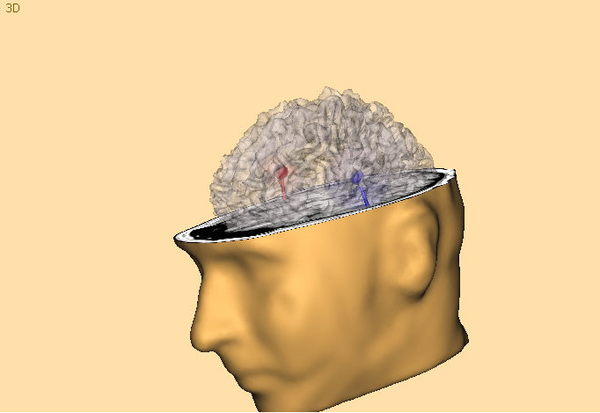

| − | + | [[Image:SA 3Dimaging (7).png|700px|thumb|c|none|Multiple source beamformer image calculated in the presence of a source in the left hemisphere. A '''single''' source scan has been performed. The source set in the current solution accounts for the left-hemispheric q-maximum in the data. Accordingly, the beamformer scan reveals only the as yet unmodeled additional activity in the right hemisphere (note the radiological convention in the 3D image display).]] | |

| − | + | The beamformer scan can be performed with a '''single''' or a '''bilateral''' source scan. The default scan type depends on the current solution: | |

| − | + | * When the beamformer is started from the Time-Frequency window, the Source Analysis window opens with a new solution and a '''bilateral''' beamformer scan is performed. | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | The beamformer scan can be performed with a single or a bilateral source scan. The default scan type depends on the current solution: | + | |

| − | * When the beamformer is started from the Time-Frequency window, the Source Analysis window opens with a new solution and a bilateral beamformer scan is performed. | + | |

* When the beamformer is started within the Source Analysis window, the default is | * When the beamformer is started within the Source Analysis window, the default is | ||

| − | + | ** a scan with a '''single''' source in addition to the sources in the current solution, if at least one source is active. | |

| − | * a scan with a single source in addition to the sources in the current solution, if at least one source is active. | + | ** a '''bilateral''' scan if no source in the current solution is active. |

| − | * a bilateral scan if no source in the current solution is active. | + | |

| − | The default scan type is the multiple source beamformer. The non-default scan type can be enforced using the corresponding ''Volume Image / Beamformer'' entry in the <span style="color:#3366ff;"> | + | The default scan type is the multiple source beamformer. The non-default scan type can be enforced using the corresponding ''Volume Image / Beamformer'' entry in the '''<span style="color:#3366ff;">Image</span>''' menu. |

'''Inserting Sources out of the Beamformer Image''' | '''Inserting Sources out of the Beamformer Image''' | ||

| + | The beamformer image can be used to add sources to the current solution. A simple double-click anywhere in the 2D- or 3D-view will generate a non-oriented regional source at the corresponding location. However, a better and easier way to create sources at image maxima and minima is to use the toolbar buttons <span style="color:#3366ff;">'''Switch to Maximum'''</span> [[Image:SA 3Dimaging (8).gif]] and <span style="color:#3366ff;">'''Add Source'''</span> [[Image:SA 3Dimaging (9).gif]]. | ||

| − | + | Use the <span style="color:#3366ff;">'''Switch to Maximum'''</span> button to place the red crosshair of the 3D window onto a local image maximum or minimum. Hitting the <span style="color:#3366ff;">'''Add Source'''</span> button creates a regional source at the location of the crosshair and therefore ensures the exact placement of the source at the image extremum. Moreover, the <span style="color:#3366ff;">'''Add Source'''</span> button generates an oriented regional source. BESA Research automatically estimates the source orientation that contributes most to the power in the target time-frequency interval (or the reference time-frequency interval, if its power is larger than that in the target interval). The accuracy of this orientation estimate depends largely on the noise content of the data. The smaller the signal-to-noise ratio of the data, the lower is the accuracy of the orientation estimate. '''This feature allows to use the beamformer as a tool to create a source montage for source coherence analysis, where it is of advantage to work with oriented sources'''. | |

| − | + | ||

| − | Use the <span style="color:#3366ff;">'''Switch to Maximum'''</span> button to place the red crosshair of the 3D window onto a local image maximum or minimum. Hitting the <span style="color:#3366ff;">'''Add Source'''</span> button creates a regional source at the location of the crosshair and therefore ensures the exact placement of the source at the image extremum. Moreover, the <span style="color:#3366ff;">'''Add Source'''</span> button generates an oriented regional source. BESA Research automatically estimates the source orientation that contributes most to the power in the target time-frequency interval (or the reference time-frequency interval, if its power is larger than that in the target interval). The accuracy of this orientation estimate depends largely on the noise content of the data. The smaller the signal-to-noise ratio of the data, the lower is the accuracy of the orientation estimate. This feature allows to use the beamformer as a tool to create a source montage for source coherence analysis, where it is of advantage to work with oriented sources. | + | |

| Line 146: | Line 139: | ||

* The current image can be exported to ASCII or BrainVoyager vmp-format from the<span style="color:#3366ff;">''' Image'''</span> menu. | * The current image can be exported to ASCII or BrainVoyager vmp-format from the<span style="color:#3366ff;">''' Image'''</span> menu. | ||

* For scaling options, use the [[Image:SA 3Dimaging (10).gif]] and [[Image:SA 3Dimaging (11).gif]] <span style="color:#3366ff;">'''Image Scale toolbar'''</span> buttons. | * For scaling options, use the [[Image:SA 3Dimaging (10).gif]] and [[Image:SA 3Dimaging (11).gif]] <span style="color:#3366ff;">'''Image Scale toolbar'''</span> buttons. | ||

| − | * Parameters used for the beamformer calculations can be set in the ''' | + | * Parameters used for the beamformer calculations can be set in the '''Standard Volumes''' of the ''Image Settings dialog box.'' |

| − | + | ||

| − | + | == Dynamic Imaging of Coherent Sources (DICS) == | |

| + | <br> | ||

'''Short mathematical introduction''' | '''Short mathematical introduction''' | ||

| Line 156: | Line 149: | ||

Dynamic Imaging of Coherent Sources (DICS) is a sophisticated method for imaging cortico-cortical coherence in the brain, or coherence between an external reference (e.g. EMG channel) and cortical structures. DICS can be applied to localize evoked as well as induced coherent cortical activity in a user-defined time-frequency range. | Dynamic Imaging of Coherent Sources (DICS) is a sophisticated method for imaging cortico-cortical coherence in the brain, or coherence between an external reference (e.g. EMG channel) and cortical structures. DICS can be applied to localize evoked as well as induced coherent cortical activity in a user-defined time-frequency range. | ||

| − | DICS was implemented in BESA closely following Gross et al., "Dynamic imaging of coherent sources: Studying neural interactions in the human brain", PNAS 98, | + | DICS was implemented in BESA closely following [https://dx.doi.org/10.1073/pnas.98.2.694 Gross et al., "Dynamic imaging of coherent sources: Studying neural interactions in the human brain", PNAS 98, 694-699, 2001]. |

The computation is based on a transformation of each channel's single trial data from the time domain into the frequency domain. This transformation is performed by the BESA Research Coherence module and results in the complex spectral density matrix that is used for constructing the spatial filter similar to beamforming. | The computation is based on a transformation of each channel's single trial data from the time domain into the frequency domain. This transformation is performed by the BESA Research Coherence module and results in the complex spectral density matrix that is used for constructing the spatial filter similar to beamforming. | ||

DICS computation yields a 3-D image, each voxel being assigned a coherence value. Coherence values can be described as a neural activity index and do not have a unit. The neural activity index contrasts coherence in a target time-frequency bin with coherence of the same time-frequency bin in a baseline. | DICS computation yields a 3-D image, each voxel being assigned a coherence value. Coherence values can be described as a neural activity index and do not have a unit. The neural activity index contrasts coherence in a target time-frequency bin with coherence of the same time-frequency bin in a baseline. | ||

| + | |||

'''DICS for cortico-cortical coherence is computed as follows:''' | '''DICS for cortico-cortical coherence is computed as follows:''' | ||

| Line 168: | Line 162: | ||

| − | [[Image:SA 3Dimaging (12).gif]] | + | <math>W\left( r \right) = \left\lbrack L^{T}\left( r \right) \cdot C^{- 1} \cdot L\left( r \right) \right\rbrack^{- 1} \cdot L^{T}(r) \cdot C^{- 1}</math> |

| + | <!-- [[Image:SA 3Dimaging (12).gif]] --> | ||

| + | |||

The cross-spectrum between two locations (voxels) r<sub>1</sub> and r<sub>2</sub> in the head are calculated with the following equation: | The cross-spectrum between two locations (voxels) r<sub>1</sub> and r<sub>2</sub> in the head are calculated with the following equation: | ||

| − | [[Image:SA 3Dimaging (13).gif]] | + | <math>C_{s}\left( r_{1},r_{2} \right) = W\left( r_{1} \right) \cdot C \cdot W^{*T}\left( r_{2} \right),</math> |

| + | <!-- [[Image:SA 3Dimaging (13).gif]] --> | ||

| Line 179: | Line 176: | ||

| − | [[Image:SA 3Dimaging (14).gif]] | + | <math>c_{s}\left( r_{1},r_{2} \right) = \lambda_{1}\left\{ C_{s}\left( r_{1},r_{2} \right) \right\},</math> |

| + | <!-- [[Image:SA 3Dimaging (14).gif]] --> | ||

| + | |||

where λ<sub>1</sub>{} indicates the largest singular value of the cross spectrum. Once the cross spectral density is estimated, the connectivity¹(CON) between the two brain regions r<sub>1</sub> and r<sub>2</sub> are calculated as follows: | where λ<sub>1</sub>{} indicates the largest singular value of the cross spectrum. Once the cross spectral density is estimated, the connectivity¹(CON) between the two brain regions r<sub>1</sub> and r<sub>2</sub> are calculated as follows: | ||

| − | [[Image:SA 3Dimaging (15).gif]] | + | <math>\text{CON}\left( r_{1},r_{2} \right) = \frac{c_{s}^{\text{sig}}\left( r_{1},r_{2} \right) - c_{s}^{\text{bl}}(r_{1},r_{2})}{c_{s}^{\text{sig}}\left( r_{1},r_{2} \right) + c_{s}^{\text{bl}}(r_{1},r_{2})},</math> |

| + | <!-- [[Image:SA 3Dimaging (15).gif]] --> | ||

| + | |||

where c<sub>s</sub><sup>sig</sup> is the cross-spectral density for the signal of interest between the two brain regions r<sub>1</sub> and r<sub>2</sub>, and c<sub>s</sub><sup>bl</sup> is the corresponding cross spectral density for the baseline or the control condition, respectively. In the case DICS is computed with a cortical reference, r<sub>1</sub> is the reference region (voxel) and remains constant while r<sub>2</sub> scans all the grid points within the brain sequentially. In that way, the connectivity between the reference brain region and all other brain regions is estimated. The value of CON(r<sub>1</sub>, r<sub>2</sub>) falls in the interval [-1 1]. If the cross-spectral density for the baseline is 0 the connectivity value will be 1. If the cross-spectral density for the signal is 0 the connectivity value will be -1. | where c<sub>s</sub><sup>sig</sup> is the cross-spectral density for the signal of interest between the two brain regions r<sub>1</sub> and r<sub>2</sub>, and c<sub>s</sub><sup>bl</sup> is the corresponding cross spectral density for the baseline or the control condition, respectively. In the case DICS is computed with a cortical reference, r<sub>1</sub> is the reference region (voxel) and remains constant while r<sub>2</sub> scans all the grid points within the brain sequentially. In that way, the connectivity between the reference brain region and all other brain regions is estimated. The value of CON(r<sub>1</sub>, r<sub>2</sub>) falls in the interval [-1 1]. If the cross-spectral density for the baseline is 0 the connectivity value will be 1. If the cross-spectral density for the signal is 0 the connectivity value will be -1. | ||

| + | |||

| + | ¹ Here, the term connectivity is used rather than coherence, as strictly speaking the coherence equation is defined slightly differently. For simplicity reasons the rest of the tutorial uses the term coherence. | ||

| Line 192: | Line 195: | ||

| − | When using an external reference, the equation for coherence calculation is slightly different compared to the equation for cortico-cortical coherence. First of all, the cross-spectral density matrix is not only computed for the MEG/EEG channels, but the external reference channel is added. This resulting matrix is C<sub>all</sub>. In this case, the cross-spectral density between the reference signal and all other MEG/EEG | + | When using an external reference, the equation for coherence calculation is slightly different compared to the equation for cortico-cortical coherence. First of all, the cross-spectral density matrix is not only computed for the MEG/EEG channels, but the external reference channel is added. This resulting matrix is C<sub>all</sub>. In this case, the cross-spectral density between the reference signal and all other MEG/EEG channels is called c<sub>ref</sub>. It is only one column of C<sub>all</sub>. Hence, the cross-spectrum in voxel r is calculated with the following equation: |

| − | |||

| + | <math>C_{s}\left( r \right) = W\left( r \right) \cdot c_{\text{ref}}</math> | ||

| + | <!-- [[Image:SA 3Dimaging (16).gif]] --> | ||

| − | |||

and the corresponding cross-spectral density is calculated as the sum of squares of C<sub>s</sub>: | and the corresponding cross-spectral density is calculated as the sum of squares of C<sub>s</sub>: | ||

| − | [[Image:SA 3Dimaging (17).gif]] | + | |

| + | <math>c_{s}\left( r \right) = \sum_{i = 1}^{n}{C_{s}\left( r \right)_{i}^{2}},</math> | ||

| + | <!-- [[Image:SA 3Dimaging (17).gif]] --> | ||

| + | |||

where n is 2 for MEG and 3 for EEG. This equation can also be described as the squared Euclidean norm of the cross-spectrum: | where n is 2 for MEG and 3 for EEG. This equation can also be described as the squared Euclidean norm of the cross-spectrum: | ||

| − | [[Image:SA 3Dimaging (18).gif]] | + | |

| + | <math>c_{s}\left( r \right) = \left\| C_{s} \right\|^{2},</math> | ||

| + | <!-- [[Image:SA 3Dimaging (18).gif]] --> | ||

| Line 211: | Line 219: | ||

| − | [[Image:SA 3Dimaging (19).gif]] | + | <math>p\left( r \right) = \lambda_{1}\left\{ C_{s}(r,r) \right\}.</math> |

| + | <!-- [[Image:SA 3Dimaging (19).gif]] --> | ||

| + | |||

At last, coherence between the external reference and cortical activity is calculated with the equation: | At last, coherence between the external reference and cortical activity is calculated with the equation: | ||

| − | [[Image:SA 3Dimaging (20).gif]] | + | |

| + | <math>\text{CON}\left( r \right) = \frac{c_{s}(r)}{p\left( r \right) \cdot C_{\text{all}}(k,k)},</math> | ||

| + | <!-- [[Image:SA 3Dimaging (20).gif]] --> | ||

| Line 228: | Line 240: | ||

'''Starting DICS computation from the Time-Frequency Window''' | '''Starting DICS computation from the Time-Frequency Window''' | ||

| − | |||

DICS is particularly useful, if coherence in a user-defined time-frequency bin (evoked or induced) is to be calculated between any two brain regions or between an external reference and the brain. DICS runs only on time-frequency decomposed data, so time-frequency analysis needs to be run before starting DICS computation. | DICS is particularly useful, if coherence in a user-defined time-frequency bin (evoked or induced) is to be calculated between any two brain regions or between an external reference and the brain. DICS runs only on time-frequency decomposed data, so time-frequency analysis needs to be run before starting DICS computation. | ||

| − | To start the DICS computation, left-drag a window over a selected time-frequency bin in the Time-Frequency Window. Right-click and select “Image”. A dialogue will open (see fig. 1) prompting you to specify time and frequency settings as well as the baseline period. It is recommended to use a baseline period of equal length as the data period of interest. Make sure to select “DICS” in the top row and press | + | To start the DICS computation, left-drag a window over a selected time-frequency bin in the Time-Frequency Window. Right-click and select “Image”. A dialogue will open (see fig. 1) prompting you to specify time and frequency settings as well as the baseline period. It is recommended to use a baseline period of equal length as the data period of interest. Make sure to select “DICS” in the top row and press “<span style="color:#3366ff;">'''Go'''</span>”. |

| − | + | [[Image:SA 3Dimaging (21).gif|450px|thumb|c|none|Fig. 1: Time and frequency settings for DICS and MSBF]] | |

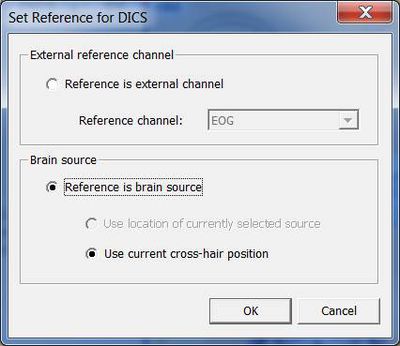

| − | + | Next, a window will appear allowing you to specify the reference source for coherence calculation (see fig. 2). It is possible to select a channel (e.g. EMG) or a brain source. If a brain source is chosen and no source analysis was computed beforehand, the option “Use current cross-hair position” must be chosen. In case discrete source analysis was computed previously, the selected source can be chosen as the reference for DICS. Please note that DICS can be re-computed with any cross-hair or source position at a later stage. | |

| + | [[Image:SA 3Dimaging (1).jpg|400px|thumb|c|none|Fig. 2: Possible options for choosing the reference]] | ||

| − | |||

| + | Confirming with “<span style="color:#3366ff;">'''OK'''</span>” will start computation of coherence between the selected channel/voxel and all other brain voxels. In case DICS is computed for a reference source in the brain, it can be advantageous to run a beamforming analysis in the selected time-frequency window first and use one of the beamforming maxima as reference for DICS. Fig. 3 shows an example for DICS calculation. | ||

| − | + | [[Image:SA 3Dimaging (22).gif|500px|thumb|c|none|Fig. 3: Coherence between left-hemispheric auditory areas and the selected voxel in the right auditory cortex.]] | |

| − | + | Coherence values range between -1 and 1. If coherence in the signal is much larger than coherence in the baseline (control condition) then the DICS value is going to approach 1. Contrary, if coherence in the baseline is much larger than coherence in the signal, then the DICS value is going to approach -1. At last, if coherence in the signal is equal to coherence in the baseline, then the DICS value is 0. | |

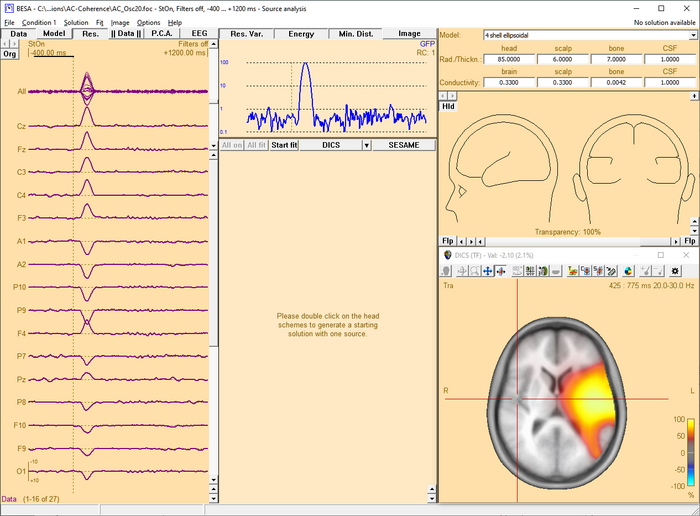

| + | In case DICS is to be re-computed with a different reference, simply mark the desired reference position by placing the cross-hair in the anatomical view and select “DICS” in the middle panel of the source analysis window (see Fig. 4). In case an external reference is to be selected, click on “DICS” in the middle panel to bring up the DICS dialogue (see. Fig. 2) and select the desired channel. Please note that DICS computation will only be available after running time-frequency analysis. | ||

| − | + | [[Image:SA 3Dimaging (23).png|700px|thumb|c|none|Fig. 4: Integration of DICS in the Source Analysis window]] | |

| + | == Multiple Source Beamformer (MSBF) in the Time Domain == | ||

| + | ''This feature requires BESA Research 7.0 or higher.'' | ||

| − | + | '''Short mathematical introduction''' | |

| + | Beamforming approach can be also applied in the time domain data. This approach was introduced as linearly constrained minimum variance (LCMV) beamformer (Van Veen et al., 1997). It allows to image evoked activity in a user-defined time range, where time is taken relative to a triggered event, and to estimate source waveforms using the calculated spatial weight at locations of interest. For an implementation of the beamformer in the time domain, data covariance matrices are required, while complex cross spectral density matrices are used for the beamformer approaches in the time-frequency domain as described in the ''[[Source_Analysis_3D_Imaging#Multiple_Source_Beamformer_.28MSBF.29_in_the_Time-frequency_Domain|Multiple Source Beamformer (MSBF) in the Time-frequency Domain]]'' section. | ||

| − | [[ | + | The bilateral beamformer introduced in the ''[[Source_Analysis_3D_Imaging#Multiple_Source_Beamformer_.28MSBF.29_in_the_Time-frequency_Domain|Multiple Source Beamformer (MSBF) in the Time-frequency Domain]]'' section is also implemented for the time-domain beamformer to take into account contributions from the homologue source in the opposite. This allows for imaging of highly correlated bilateral activity in the two hemispheres that commonly occurs during processing of external stimuli. In addition, the beamformer computation can take into account possibly correlated sources at arbitrary locations. |

| − | + | The beamformer spatial weight W(r) for the voxel r in the brain is defined as follows (Van Veen et al., 1997): | |

| − | |||

| − | + | <math>\mathrm{W}(r) = [\mathrm{L}^T(r)\mathrm{C}^{-1}\mathrm{L}(r)]^{-1}\mathrm{L}^T(r)\mathrm{C}^{-1}</math> | |

| + | <!-- [[File:MSBF1.png]] --> | ||

| − | + | where <math>\mathrm{C}^{-1}</math> is the inversed regularized average of covariance matrix over trials, '''L''' is the leadfield matrix of the model containing a regional source at target location r and optionally additional sources whose interference with the target source is to be minimized. The beamformer spatial weight '''W'''(r) can be applied to the measured data to estimate source waveform at a location r (beamformer virtual sensor): | |

| − | + | <math>\mathrm{S}(r,t) = \mathrm{W}(r)\mathrm{M}(t)</math> | |

| + | <!-- [[File:MSBF2.png]] --> | ||

| − | + | where '''S'''(r,t) represents the estimated source waveform and '''M'''(t) represents measured EEG or MEG signals. The output power P of the beamformer for a specific brain region at location r is computed by the following equation: | |

| − | |||

| − | = | + | <math>\mathrm{P}(r) = \operatorname{tr^{'}}[\mathrm{W}(r) \cdot \mathrm{C} \cdot \mathrm{W}^T(r)]</math> |

| + | <!-- [[File:MSBF3.png]] --> | ||

| − | |||

| − | + | where tr’[ ] is the trace of the [3×3] (MEG: [2×2]) submatrix of the bracketed expression that corresponds to the source at target location r. | |

| − | + | Beamformer can suppress noise sources that are correlated across sensors. However, uncorrelated noise will be amplified in a spatially non-uniform manner, with increasing distortion with increasing distance from the sensors (Van Veen et al., 1997; Sekihara et al., 2001). For this reason, estimated source power should be normalized by a noise power. In BESA Research, the output power P(r) is normalized with the output power in a baseline interval or with the output power of a uncorrelated noise: P(r) / Pref (r). | |

| − | + | ||

| − | + | ||

| − | + | ||

| + | The time-domain beamformer image is constructed from values q(r) computed for all locations on a grid specified in the '''<u>General Settings</u>''' tab. A value q(r) is defined as described in | ||

| + | the ''[[Source_Analysis_3D_Imaging#Multiple_Source_Beamformer_.28MSBF.29_in_the_Time-frequency_Domain|Multiple Source Beamformer (MSBF) in the Time-frequency Domain]]'' section with data covariance matrices instead of cross-spectral density matrices. | ||

| − | |||

| − | + | '''Applying the Beamformer''' | |

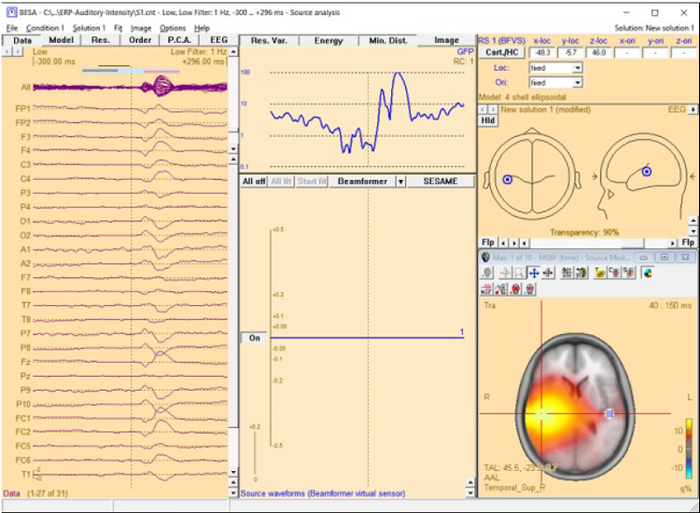

| + | This chapter illustrates the usage of the BESA beamformer in the time domain. The displayed figures are generated using the file ‘Examples/ERP-Auditory-Intensity/S1.cnt’. | ||

| − | '' | + | '''''Starting the time-domain beamformer from the Average tab of the Paradigm dialog box''''' |

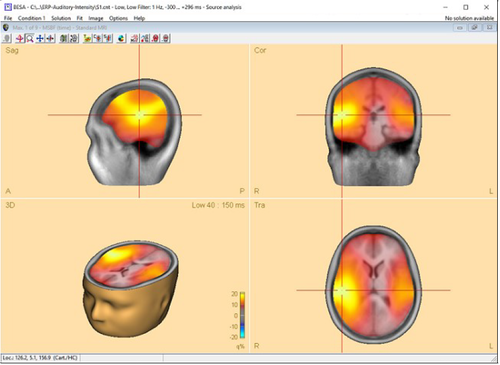

| − | + | The time-domain beamformer is needed data covariance matrices and therefore requires the ERP module to be enabled. After the beamformer computation has been initiated in the '''<u>Average tab of the Paradigm dialog box</u>''', the source analysis window opens with an enlarged 3D image of the q-value computed with a bilateral beamformer. The result is superimposed onto the MR image assigned to the data set (individual or standard). | |

| + | [[File:MSBF4.png|500px|thumb|c|none|Beamformer image for auditory evoked data after starting the computation in the '''<u>Average tab of the Paradigm dialog box'''</u>. The bilateral beamformer manages to separate the activities in auditory areas, while a traditional single-source beamformer would merge the two sources into one image maximum in the head center instead.]] | ||

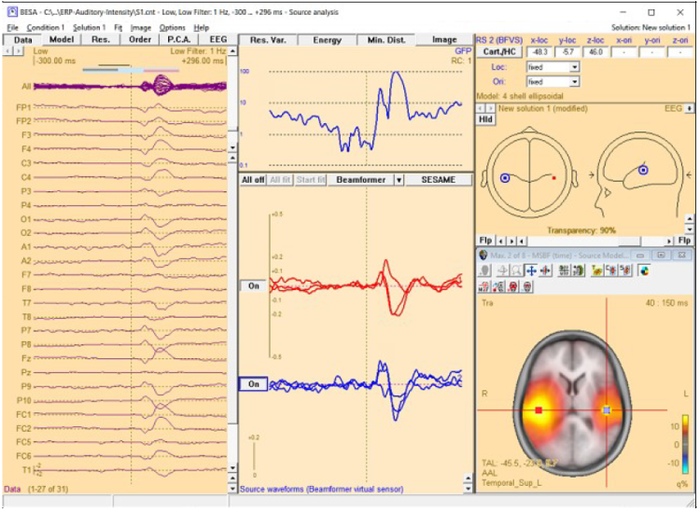

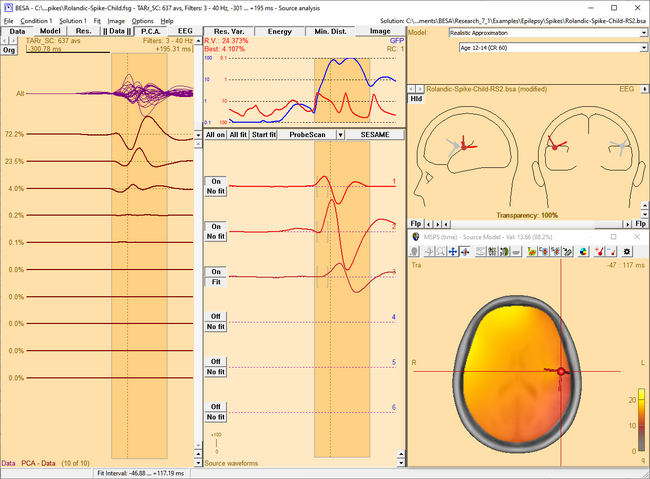

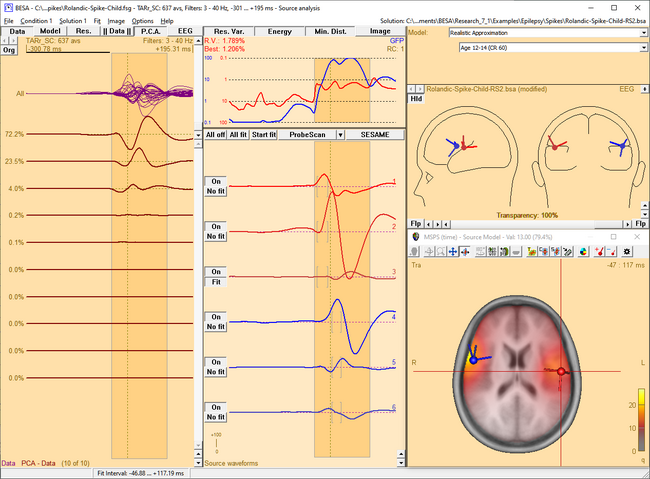

| − | '' | + | '''''Multiple-source beamformer in the Source Analysis window''''' |

| − | [[Image:SA 3Dimaging ( | + | The 3D imaging display is part of the source analysis window. In the Channel box, the averaged (evoked) data of the selected condition is shown. Selected covariance intervals in the ERP module can be checked in the Channel box. The red, gray, and blue rectangles indicate signal, baseline, and common interval, respectively. |

| + | |||

| + | [[File:MSBF55.png|700px|thumb|c|none|Source Analysis window with beamformer image. The two beamformer virtual sensors have been added using the Switch to Maximum and Add Source toolbar buttons (see below). | ||

| + | Source waveforms are computed using the beamformer spatial weights and the displayed averaged data (the noise normalized weights (5% noise) option was used to compute the beamformer image).]] | ||

| + | |||

| + | |||

| + | When starting the beamformer from the '''<u>Average tab of the Paradigm dialog box</u>''', the bilateral beamformer scan is performed. In the source analysis window, the beamformer computation can be repeated taking into account possibly correlated sources that are specified in the current solution. Interfering activities generated by all sources in the current solution that are in the 'On' state are specifically suppressed (they enter the leadfield matrix L in the beamformer calculation). The computation can be started from the '''<u>Image</u>''' menu or from the Image selector button [[File:MSBF_Button.png|22px|Image: 22 pixels]] dropdown menu. The Image menu can be evoked either from the menu bar or by right-clicking anywhere in the source analysis window. | ||

| + | |||

| + | [[File:MSBF66.png|700px|thumb|c|none|Multiple-source beamformer image calculated in the presence of a source in the left hemisphere. A single-source scan has been performed instead of a bilateral beamforemr. The source set in the current solution accounts for the left-hemispheric q-maximum in the data. Accordingly, the beamformer scan reveals only the as yet unmodeled additional activity in the right hemisphere (note the radiological convention in the 3D image display). The source waveform of the beamformer virtual sensor in the left hemisphere is not shown since the location (blue square in the figure) is not considered for the multiple-source beamformer.]] | ||

| + | |||

| + | |||

| + | The beamformer scan can be performed with a single or a bilateral source scan. The default scan type depends on the current solution: | ||

| + | |||

| + | When the beamformer is started from the '''<u>Average tab of the Paradigm dialog box</u>''' the Source Analysis window opens with a new solution and a bilateral beamformer scan is performed. | ||

| + | |||

| + | When the beamformer is started within the Source Analysis window, the default is: | ||

| + | * a scan with a single source in addition to the sources in the current solution, if at least one source is active. | ||

| + | * a bilateral scan if no source in the current solution is active. | ||

| + | * a scan with a single source when scalar-type beamformer is selected in the '''<u>beamformer option dialog box</u>'''. | ||

| + | |||

| + | The default scan type is the multiple source beamformer. The non-default scan type can be enforced using the corresponding Volume Image / Beamformer entry in the Image main | ||

| + | menu or in the beamformer option dialog box (only for the time-domain beamformer). | ||

| + | |||

| + | |||

| + | '''Inserting Sources as Beamformer Virtual Sensor out of the Beamformer Image''' | ||

| + | |||

| + | This is similar to the inserting sources out of the beamformer image in Multiple Source Beamformer (MSBF) in the Time-frequency Domain section. | ||

| + | |||

| + | The beamformer image can be used to add beamformer virtual sensors to the current solution. A simple double-click anywhere in the 3D view (not in the 2D view) will generate a source at the corresponding location. A better and easier way to create sources at image maxima and minima is to use the toolbar buttons <span style="color:#3366ff;">'''Switch to Maximum'''</span> [[Image:SA 3Dimaging (8).gif]] and <span style="color:#3366ff;">'''Add Source'''</span> [[Image:SA 3Dimaging (9).gif]]. | ||

| + | |||

| + | This feature allows to use the beamformer as a tool to create a source montage for '''<u>source coherence</u>''' analysis. A source montage file (*.mtg) for beamformer virtual sensors can | ||

| + | be saved using File \ Save Source Montage As… entry. | ||

| + | |||

| + | The time-domain beamformer image can be also used to add regional or dipole sources to the current solution. Press '''N''' key when there is no source in the current source array or there is more than one beamformer virtual sensor. To create a new source array for beamformer virtual sensor, press '''N''' key when there is more than one regional or dipole source in the current source array. | ||

| + | |||

| + | |||

| + | '''Notes''' | ||

| + | |||

| + | * You can hide or re-display the last computed image by selecting ''Hide Image'' entry in the '''<u>Image</u>''' menu. | ||

| + | * The current image can be exported to ASCII, ANALYZE, or BrainVoyager (*.vmp) format from the '''<u>Image</u>''' menu. | ||

| + | * For scaling options, use [[Image:SA 3Dimaging (10).gif]] and [[Image:SA 3Dimaging (11).gif]] <span style="color:#3366ff;">'''Image Scale toolbar'''</span> buttons. | ||

| + | * Parameters used for the beamformer calculations can be set in the '''Standard Volume tab of the Image Settings <u>dialog box</u>'''. | ||

| + | * Note that Model, Residual, Order, and Residual variance are not shown for the beamformer virtual sensor type sources. | ||

| + | |||

| + | |||

| + | '''References''' | ||

| + | |||

| + | * Sekihara, K., Nagarajan, S. S., Poeppel, D., Marantz, A., & Miyashita, Y. (2001). Reconstructing spatio-temporal activities of neural sources using an MEG vector beamformer technique. IEEE Transactions on Biomedical Engineering, 48(7), 760–771. | ||

| + | |||

| + | * Van Veen, B. D., Van Drongelen, W., Yuchtman, M., & Suzuki, A. (1997). Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Transactions on Biomedical Engineering, 44(9), 867–880 | ||

| + | |||

| + | == CLARA == | ||

| + | |||

| + | CLARA ('Classical LORETA Analysis Recursively Applied') is an iterative application of weighted LORETA images with a reduced source space in each iteration. | ||

| + | |||

| + | In an initialization step, a LORETA image is calculated. Then in each iteration the following steps are performed: | ||

| + | |||

| + | # The obtained image is spatially smoothed (this step is left out in the first iteration). | ||

| + | # All grid points with amplitudes below a threshold of 1% of the maximum activity are set to zero, thus being effectively eliminated from the source space in the following step. | ||

| + | # The resulting image defines a spatial weighting term (for each voxel the corresponding image amplitude). | ||

| + | # A LORETA image is computed with an additional spatial weighting term for each voxel as computed in step 3. By the default settings in BESA Research, the regularization values used in the iteration steps are slightly higher than that of the initialization LORETA image. | ||

| + | |||

| + | |||

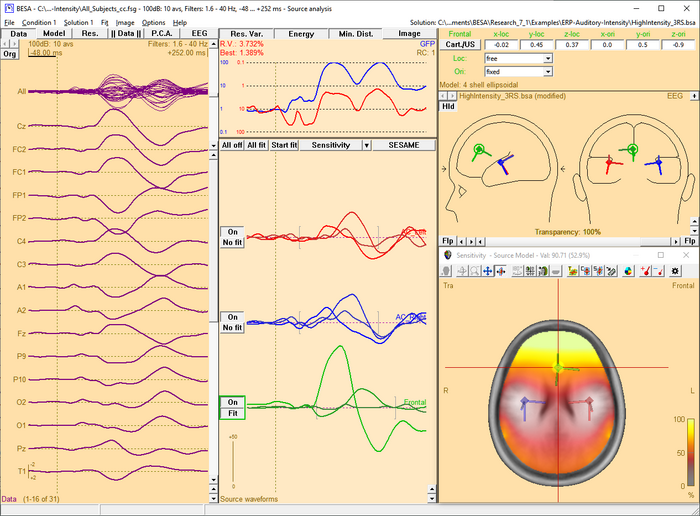

| + | The procedure stops after 2 iterations, and the image computed in the last iteration is displayed. Please note that you can change all parameters by creating a user-defined volume image. | ||

| + | The advantage of CLARA over non-focusing distributed imaging methods is visualized by the figure below. Both images are computed from the N100 response in an auditory oddball experiment (file <span style="color:#ff9c00;">'''Oddball.fsg'''</span> in subfolder ''fMRI+EEG-RT-Experiment'' of the ''Examples'' folder). The CLARA image is much more focal than the sLORETA image, making it easier to determine the location of the image maxima. | ||

| + | <div><ul> | ||

| + | <li style="display: inline-block;"> [[File:SA 3Dimaging (24).gif|thumb|350px|sLORETA image]] </li> | ||

| + | <li style="display: inline-block;"> [[File:SA 3Dimaging (25).gif|thumb|350px|CLARA image]] </li> | ||

| + | </ul></div> | ||

'''Notes:''' | '''Notes:''' | ||

* Starting CLARA: CLARA can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | * Starting CLARA: CLARA can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | ||

| − | * Please refer to '' | + | * Please refer to Chapter ''[[Source_Analysis_3D_Imaging#Regularization_of_distributed_volume_images|Regularization of distributed volume images]]'' for important information on regularization of distributed inverses. |

| + | == LAURA == | ||

| − | + | LAURA (Local Auto Regressive Average) belongs to the distributed inverse method of the family of weighted minimum norm methods ([https://doi.org/10.1023/A:1012944913650 Grave de Peralta Menendeza et al., "Noninvasive Localization of Electromagnetic Epileptic Activity. I. Method Descriptions and Simulations", BrainTopography 14(2), 131-137, 2001]). LAURA uses a spatial weighting function that includes depth weighting and that term has the form of a local autoregressive function. | |

| − | + | ||

| − | LAURA ( | + | |

The source activity is estimated by applying the general formula for a weighted minimum norm: | The source activity is estimated by applying the general formula for a weighted minimum norm: | ||

| − | [[Image:SA 3Dimaging (26).gif]] | + | <math>\mathrm{S}\left( t \right) = \mathrm{V} \cdot \mathrm{L}^{T}\left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} \right)^{- 1} \cdot \mathrm{D}(t)</math> |

| + | <!-- [[Image:SA 3Dimaging (26).gif]] --> | ||

| Line 323: | Line 407: | ||

| − | [[Image:SA 3Dimaging (27).gif]] | + | <math>\mathrm{V} = \left( \mathrm{U}^{T} \cdot \mathrm{U} \right)^{-1}</math> |

| + | <!-- [[Image:SA 3Dimaging (27).gif]] --> | ||

| − | + | where | |

| − | [[Image:SA 3Dimaging (28).gif]] | + | <math>\mathrm{U} = \left( \mathrm{W} \cdot \mathrm{A} \right) \otimes \mathrm{I}_{3}</math> |

| + | <!-- [[Image:SA 3Dimaging (28).gif]] --> | ||

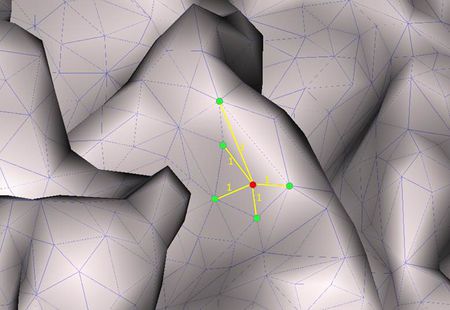

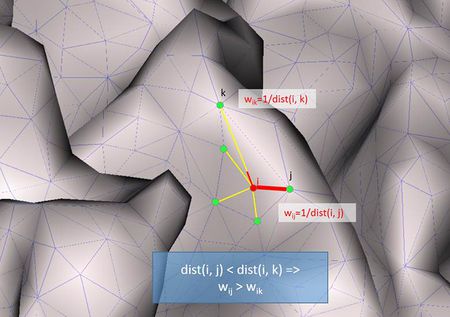

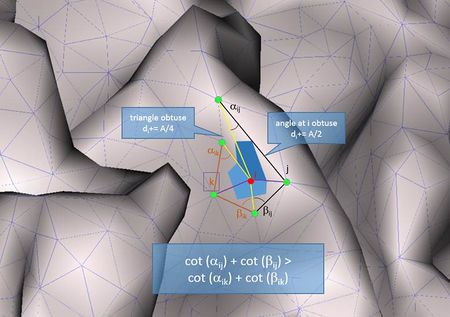

| − | Here, | + | Here, <math>\otimes</math> denotes the Kronecker product. I<sub>3</sub> is the [3×3] identity matrix. W is an [s×s] diagonal matrix (with s the number of source locations on the grid), where each diagonal element is the inverse of the maximum singular value of the corresponding regional source's leadfields. The formula for the diagonal components A<sub>ii</sub> and the off-diagonal components A<sub>ik</sub> are as follows: |

| − | [[Image:SA 3Dimaging (29).gif]] | + | <math>\mathrm{A}_{ii} = \frac{26}{\mathrm{N}_{i}}\sum_{k \subset V_{i}}^{}\frac{1}{\mathrm{d}_{ik}^{2}}</math> |

| + | <!-- [[Image:SA 3Dimaging (29).gif]] --> | ||

| − | [[Image:SA 3Dimaging (30).gif]] | + | <math> |

| + | \mathrm{A}_{ik} = | ||

| + | \begin{cases} | ||

| + | - 1/\operatorname{dist}\left( i,k \right)^{2}, & \text{if } k \subset V_{i} \\ | ||

| + | 0, & \text{otherwise} | ||

| + | \end{cases} | ||

| + | </math> | ||

| + | <!-- [[Image:SA 3Dimaging (30).gif]] --> | ||

| Line 348: | Line 442: | ||

* '''Grid spacing:''' Due to memory limitations, LAURA images require a grid spacing of 7 mm or more. | * '''Grid spacing:''' Due to memory limitations, LAURA images require a grid spacing of 7 mm or more. | ||

| − | * '''Computation time:''' Computation speed during the first LAURA image calculation depends on the grid spacing (computation is faster with larger grid spacing). After the first computation of a LAURA image, a <span style="color:#ff9c00;">''' | + | * '''Computation time:''' Computation speed during the first LAURA image calculation depends on the grid spacing (computation is faster with larger grid spacing). After the first computation of a LAURA image, a <span style="color:#ff9c00;">'''*.laura'''</span> file is stored in the data folder, containing intermediate results of the LAURA inverse. This file is used during all subsequent LAURA image computations. Thereby, the time needed to obtain the image is substantially reduced. |

* '''MEG:''' In the case of MEG data, an additional constraint is implemented in the LAURA algorithm that prevents solutions from containing radial source currents (compare Pascual-Marqui, ISBET Newsletter 1995, 22-29). In MEG, an additional source space regularization is necessary in the inverse matrix operation required compute V | * '''MEG:''' In the case of MEG data, an additional constraint is implemented in the LAURA algorithm that prevents solutions from containing radial source currents (compare Pascual-Marqui, ISBET Newsletter 1995, 22-29). In MEG, an additional source space regularization is necessary in the inverse matrix operation required compute V | ||

* '''Starting LAURA:''' LAURA can be started from the<span style="color:#3366ff;">''' Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | * '''Starting LAURA:''' LAURA can be started from the<span style="color:#3366ff;">''' Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | ||

* '''Regularization:''' Please refer to Chapter'' “Regularization of distributed volume images” ''for important information on regularization of distributed inverses. | * '''Regularization:''' Please refer to Chapter'' “Regularization of distributed volume images” ''for important information on regularization of distributed inverses. | ||

| − | + | == LORETA == | |

| − | + | ||

| − | + | ||

LORETA ("Low Resolution Electromagnetic Tomography") is a distributed inverse method of the family of ''weighted minimum norm'' methods. LORETA was suggested by R.D. Pascual-Marqui (International Journal of Psychophysiology. 1994, 18:49-65). LORETA is characterized by a smoothness constraint, represented by a discrete 3D Laplacian. | LORETA ("Low Resolution Electromagnetic Tomography") is a distributed inverse method of the family of ''weighted minimum norm'' methods. LORETA was suggested by R.D. Pascual-Marqui (International Journal of Psychophysiology. 1994, 18:49-65). LORETA is characterized by a smoothness constraint, represented by a discrete 3D Laplacian. | ||

| Line 361: | Line 453: | ||

The source activity is estimated by applying the general formula for a weighted minimum norm: | The source activity is estimated by applying the general formula for a weighted minimum norm: | ||

| − | [[Image:SA 3Dimaging (26).gif]] | + | |

| + | <math>\mathrm{S}\left( t \right) = \mathrm{V} \cdot \mathrm{L}^{T}\left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} \right)^{- 1} \cdot \mathrm{D}(t)</math> | ||

| + | <!-- [[Image:SA 3Dimaging (26).gif]] --> | ||

| + | |||

Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D(t) is the data at time point t. The term in parentheses is generally regularized. Regularization parameters can be specified in the ''Image Settings.'' | Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D(t) is the data at time point t. The term in parentheses is generally regularized. Regularization parameters can be specified in the ''Image Settings.'' | ||

| Line 367: | Line 462: | ||

In LORETA, V contains both a depth weighting term and a representation of the 3D Laplacian matrix. V is computed as: | In LORETA, V contains both a depth weighting term and a representation of the 3D Laplacian matrix. V is computed as: | ||

| − | |||

| − | + | <math>\mathrm{V} = \left( \mathrm{U}^{T} \cdot \mathrm{U} \right)^{- 1}</math> | |

| + | <!-- [[Image:SA 3Dimaging (27).gif]] --> | ||

| − | |||

| − | Here, | + | where |

| + | |||

| + | |||

| + | <math>\mathrm{U} = \left( \mathrm{W} \cdot \mathrm{A} \right) \otimes \mathrm{I}_{3}</math> | ||

| + | <!-- [[Image:SA 3Dimaging (28).gif]] --> | ||

| + | |||

| + | |||

| + | Here, <math>\otimes</math> denotes the Kronecker product. I<sub>3</sub> is the [3x3] identity matrix. W is an [sxs] diagonal matrix (with s the number of source locations on the grid), where each diagonal element is the inverse of the maximum singular value of the corresponding regional source's leadfields. A contains the 3D Laplacian and is computed as | ||

| + | |||

| + | |||

| + | <math>\mathrm{A} = \mathrm{Y} - \mathrm{I}_{s}</math> | ||

| + | <!-- [[Image:SA 3Dimaging (31).gif]] --> | ||

| − | |||

with I<sub>s</sub> the [sxs] identity matrix, where s is the number of sources (= three times the number of grid points) and | with I<sub>s</sub> the [sxs] identity matrix, where s is the number of sources (= three times the number of grid points) and | ||

| − | |||

| − | + | <math>\mathrm{Y} = \frac{1}{2}\left\{ \mathrm{I}_{s} + \left\lbrack \operatorname{diag}\left( \mathrm{Z} \cdot \left\lbrack 111 \ldots 1 \right\rbrack^{T} \right) \right\rbrack^{- 1} \right\} \cdot \mathrm{Z}</math> | |

| + | <!-- [[Image:SA 3Dimaging (32).gif]] --> | ||

| + | |||

| + | |||

| + | where | ||

| + | |||

| + | |||

| + | <math> | ||

| + | \mathrm{Z}_{ik} = | ||

| + | \begin{cases} | ||

| + | 1/6, & \text{if } \operatorname{dist}\left( i,k \right) = 1 \text{ grid point} \\ | ||

| + | 0, & \text{otherwise} | ||

| + | \end{cases} | ||

| + | </math> | ||

| + | <!-- [[Image:SA 3Dimaging (33).gif]] --> | ||

| − | |||

The LORETA image in BESA Research displays the norm of the 3 components of S at each location r. Using the menu function ''Image / Export Image As... ''you have the option to save this norm of S or alternatively all components separately to disk. | The LORETA image in BESA Research displays the norm of the 3 components of S at each location r. Using the menu function ''Image / Export Image As... ''you have the option to save this norm of S or alternatively all components separately to disk. | ||

| Line 394: | Line 510: | ||

* '''Regularization:''' Please refer to Chapter “''Regularization of distributed volume images”'' for important information on regularization of distributed source models. | * '''Regularization:''' Please refer to Chapter “''Regularization of distributed volume images”'' for important information on regularization of distributed source models. | ||

| + | == sLORETA == | ||

| + | This distributed inverse method consists of a ''standardized, unweighted minimum norm''. The method was originally suggested by R.D. Pascual-Marqui (Methods & Findings in Experimental & Clinical Pharmacology 2002, 24D:5-12) Starting point is an unweighted minimum norm computation: | ||

| − | |||

| − | + | <math>\mathrm{S}_{\text{MN}}\left( t \right) = \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{L}^{T} \right)^{- 1} \cdot \mathrm{D}(t)</math> | |

| + | <!-- [[Image:SA 3Dimaging (34).gif]] --> | ||

| − | |||

Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D(t) is the data at time point t. The term in parentheses is generally regularized. Regularization parameters can be specified in the ''Image Settings''. | Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D(t) is the data at time point t. The term in parentheses is generally regularized. Regularization parameters can be specified in the ''Image Settings''. | ||

| Line 406: | Line 523: | ||

This minimum norm estimate is now standardized to produce the sLORETA activity at a certain brain location r: | This minimum norm estimate is now standardized to produce the sLORETA activity at a certain brain location r: | ||

| − | [[Image:SA 3Dimaging (35).gif]] | + | |

| + | <math>\mathrm{S}_{\text{sLORETA}, r} = \mathrm{R}_{rr}^{-1/2} \cdot \mathrm{S}_{\text{MN},r}</math> | ||

| + | <!-- [[Image:SA 3Dimaging (35).gif]] --> | ||

| + | |||

S<sub>sMN,r </sub>is the [3x1] (MEG: [2x1]) minimum norm estimate of the 3 (MEG: 2) dipoles at location r. R<sub>rr</sub> is the [3x3] (MEG: [2x2]) diagonal block of the resolution matrix R that corresponds to the source components at the target location r. The resolution matrix is defined as: | S<sub>sMN,r </sub>is the [3x1] (MEG: [2x1]) minimum norm estimate of the 3 (MEG: 2) dipoles at location r. R<sub>rr</sub> is the [3x3] (MEG: [2x2]) diagonal block of the resolution matrix R that corresponds to the source components at the target location r. The resolution matrix is defined as: | ||

| − | |||

| − | The sLORETA image in BESA Research displays the norm of S<sub>sLORETA</sub>, r at each location r. Using the menu function ''Image / Export Image As...'' you have the option to save this norm of S<sub>sLORETA</sub>, r or alternatively all components separately to disk. | + | <math>\mathrm{R} = \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{L}^{T} + \lambda \cdot \mathrm{I} \right)^{-1} \cdot \mathrm{L}</math> |

| + | <!-- [[Image:SA 3Dimaging (36).gif]] --> | ||

| + | |||

| + | |||

| + | The sLORETA image in BESA Research displays the norm of S<sub>sLORETA</sub>, <sub>r</sub> at each location r. Using the menu function ''Image / Export Image As...'' you have the option to save this norm of S<sub>sLORETA</sub>, <sub>r</sub> or alternatively all components separately to disk. | ||

| Line 418: | Line 541: | ||

* sLORETA can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | * sLORETA can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | ||

| − | * Please refer to Chapter | + | * Please refer to Chapter [[#Regularization_of_distributed_volume_images|''Regularization of distributed volume images'']] for important information on regularization of distributed inverses. |

| − | + | ||

| − | + | == swLORETA == | |

This distributed inverse method is a ''standardized, depth-weighted minimum norm'' (E. Palmero-Soler et al 2007 Phys. Med. Biol. 52 1783-1800). It differs from sLORETA only by an additional depth weighting. | This distributed inverse method is a ''standardized, depth-weighted minimum norm'' (E. Palmero-Soler et al 2007 Phys. Med. Biol. 52 1783-1800). It differs from sLORETA only by an additional depth weighting. | ||

| Line 427: | Line 549: | ||

Starting point is a depth-weighted minimum norm computation: | Starting point is a depth-weighted minimum norm computation: | ||

| − | [[Image:SA 3Dimaging (37).gif]] | + | |

| + | <math>\mathrm{S}_{\text{MN}}\left( t \right) = \mathrm{V} \cdot \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} \right)^{-1} \cdot \mathrm{D}(t)</math> | ||

| + | <!-- [[Image:SA 3Dimaging (37).gif]] --> | ||

| Line 436: | Line 560: | ||

This minimum norm estimate is now standardized to produce the swLORETA activity at a certain brain location r: | This minimum norm estimate is now standardized to produce the swLORETA activity at a certain brain location r: | ||

| − | [[Image:SA 3Dimaging (38).gif]] | + | |

| + | <math>\mathrm{S}_{\text{swLORETA},r} = \mathrm{R}_{rr}^{-1/2} \cdot \mathrm{S}_{\text{MN},r}</math> | ||

| + | <!-- [[Image:SA 3Dimaging (38).gif]] --> | ||

| + | |||

S<sub>sMN,r</sub> is the [3x1] (MEG: [2x1]) depth-weighted minimum norm estimate of the regional source at location r. R<sub>rr</sub> is the [3x3] (MEG: [2x2]) diagonal block of the resolution matrix R that corresponds to the source components at the target location r. The resolution matrix is defined as: | S<sub>sMN,r</sub> is the [3x1] (MEG: [2x1]) depth-weighted minimum norm estimate of the regional source at location r. R<sub>rr</sub> is the [3x3] (MEG: [2x2]) diagonal block of the resolution matrix R that corresponds to the source components at the target location r. The resolution matrix is defined as: | ||

| − | |||

| − | The swLORETA image in BESA Research displays the norm of S<sub>swLORETA</sub>, r at each location r. Using the menu function ''Image / Export Image As...'' you have the option to save this norm of S<sub>swLORETA</sub>, r or alternatively all components separately to disk. | + | <math>\mathrm{R} = \mathrm{V} \cdot \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} + \lambda \cdot \mathrm{I} \right)^{-1} \cdot \mathrm{L}</math> |

| + | <!-- [[Image:SA 3Dimaging (39).gif]] --> | ||

| + | |||

| + | |||

| + | The swLORETA image in BESA Research displays the norm of S<sub>swLORETA</sub>, <sub>r</sub> at each location r. Using the menu function ''Image / Export Image As...'' you have the option to save this norm of S<sub>swLORETA</sub>, <sub>r</sub> or alternatively all components separately to disk. | ||

| Line 450: | Line 580: | ||

* Please refer to Chapter “''Regularization of distributed volume images”'' for important information on regularization of distributed inverses. | * Please refer to Chapter “''Regularization of distributed volume images”'' for important information on regularization of distributed inverses. | ||

| + | == sSLOFO == | ||

| + | SSLOFO (standardized shrinking LORETA-FOCUSS) is an iterative application of weighted distributed source images with a reduced source space in each iteration ([https://dx.doi.org/10.1109/TBME.2005.855720 Liu et al., "Standardized shrinking LORETA-FOCUSS (SSLOFO): a new algorithm for spatio-temporal EEG source reconstruction", IEEE Transactions on Biomedical Engineering 52(10), 1681-1691, 2005]). | ||

| − | + | In an initialization step, an [[#sLORETA | sLORETA]] image is calculated. Then in each iteration the following steps are performed: | |

| − | |||

| − | + | # A weighted minimum norm solution is computed according to the formula <math display="inline">\mathrm{S} = \mathrm{V} \cdot \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} \right)^{-1} \cdot \mathrm{D}</math> <!-- [[Image:SA 3Dimaging (40).gif]] -->. Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D is the data at the time point under consideration. V is a diagonal spatial weighting matrix that is computed in the previous iteration step. In the first iteration, the elements of V contain the magnitudes of the initially computed LORETA image. | |

| − | + | # Standardization of this weighted minimum norm image is performed with the resolution matrix as in [[#sLORETA | sLORETA]]. | |

| − | + | ||

| − | # A weighted minimum norm solution is computed according to the formula | + | |

| − | # Standardization of this weighted minimum norm image is performed with the resolution matrix as in sLORETA. | + | |

# The obtained standardized weighted minimum norm image is being smoothed to get S<sub>smooth</sub>. | # The obtained standardized weighted minimum norm image is being smoothed to get S<sub>smooth</sub>. | ||

# All voxels with amplitudes below a threshold of 1% of the maximum activity get a weight of zero in the next iteration step, thus being effectively eliminated from the source space in the next iteration step. | # All voxels with amplitudes below a threshold of 1% of the maximum activity get a weight of zero in the next iteration step, thus being effectively eliminated from the source space in the next iteration step. | ||

| − | # For all other voxels, compute the elements of the spatial weighting matrix V to be used in the next iteration as follows: | + | # For all other voxels, compute the elements of the spatial weighting matrix V to be used in the next iteration as follows: <math display="inline">\mathrm{V}_{ii,\text{next iteration}} = \frac{1}{\left\| \mathrm{L}_{i} \right\|} \cdot \mathrm{S}_{ii,\text{smooth}} \cdot \mathrm{V}_{ii,\text{current iteration}}</math> |

| + | <!-- [[Image:SA 3Dimaging (41).gif]] --> | ||

| − | |||

| − | + | The procedure stops after 3 iterations. Please note that you can change all parameters by creating a [[#User-Defined Volume Image | user-defined volume image]]. | |

| − | The procedure stops after 3 iterations. Please note that you can change all parameters by creating a user-defined volume image. | + | |

'''Notes:''' | '''Notes:''' | ||

* '''Starting sSLOFO''': sSLOFO can be started from the<span style="color:#3366ff;">''' Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | * '''Starting sSLOFO''': sSLOFO can be started from the<span style="color:#3366ff;">''' Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | ||

| − | * Please refer to Chapter | + | * Please refer to Chapter ''[[#Regularization of distributed volume images | Regularization of distributed volume images]]'' for important information on regularization of distributed inverses. |

| − | + | ||

| − | + | == User-Defined Volume Image == | |

In addition to the predefined 3D imaging methods in BESA Research, it is possible to create user-defined imaging methods based on the general formula for distributed inverses: | In addition to the predefined 3D imaging methods in BESA Research, it is possible to create user-defined imaging methods based on the general formula for distributed inverses: | ||

| − | |||

| + | <math>\mathrm{S}\left( t \right) = \mathrm{V} \cdot \mathrm{L}^{T} \cdot \left( \mathrm{L} \cdot \mathrm{V} \cdot \mathrm{L}^{T} \right)^{-1} \cdot \mathrm{D}(t)</math> | ||

| + | <!-- [[Image:SA 3Dimaging (26).gif]] --> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| + | Here, L is the leadfield matrix of the distributed source model with regional sources distributed on a regular cubic grid. D(t) is the data at time point t. Custom-defined parameters are: | ||

| − | All parameters for the user-defined volume image are specified in the User-Defined Volume Tab of the Image Settings dialog box. Please refer to chapter | + | * '''The spatial weighting matrix V''': This may include depth weighting, image weighting, or cross-voxel weighting with a 3D Laplacian (as in LORETA) or an autoregressive function (as in LAURA). |

| + | * '''Regularization''': The term in parentheses is generally regularized. Note that regularization has a strong effect on the obtained results. Please refer to chapter ''Regularization of Distributed Volume Images''for more information. | ||

| + | * '''Standardization''': Optionally, the result of the distributed inverse can be standardized with the resolution matrix (as in sLORETA). | ||

| + | * '''Iterations''': Inverse computations can be applied iteratively. Each iteration is weighted with the image obtained in the previous iteration. | ||

| + | |||

| + | All parameters for the user-defined volume image are specified in the User-Defined Volume Tab of the Image Settings dialog box. Please refer to chapter ''User-Defined Volume Tab'' for details. | ||

'''Notes:''' | '''Notes:''' | ||

| + | |||

* Starting the user-defined volume image: the image calculation can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | * Starting the user-defined volume image: the image calculation can be started from the <span style="color:#3366ff;">'''Image'''</span> menu or from the <span style="color:#3366ff;">'''Image Selection'''</span> button. | ||

| − | * Please refer to Chapter | + | * Please refer to Chapter ''Regularization of distributed volume images'' for important information on regularization of distributed inverses. |

| − | + | == Regularization of distributed volume images == | |

| − | + | ||

| − | + | ||

Distributed source images require the inversion of a term of the form L V L<sup>T</sup>. This term is generally regularized before its inversion. In BESA Research, selection can be made between two different regularization approaches (parameters are defined in the ''Image Settings dialog box''): | Distributed source images require the inversion of a term of the form L V L<sup>T</sup>. This term is generally regularized before its inversion. In BESA Research, selection can be made between two different regularization approaches (parameters are defined in the ''Image Settings dialog box''): | ||

* '''Tikhonov regularization''': In Tikhonov regularization, the term L V L<sup>T</sup> is inverted as (L V L<sup>T </sup>+λ I)<sup>-1</sup>. Here, l is the regularization constant, and I is the identity matrix. | * '''Tikhonov regularization''': In Tikhonov regularization, the term L V L<sup>T</sup> is inverted as (L V L<sup>T </sup>+λ I)<sup>-1</sup>. Here, l is the regularization constant, and I is the identity matrix. | ||

| − | * One way of determining the optimum regularization constant is by minimizing the ''generalized cross'' ''validation error'' (CVE). | + | ** One way of determining the optimum regularization constant is by minimizing the ''generalized cross'' ''validation error'' (CVE). |

| − | * Alternatively, the regularization constant can be specified manually as a percentage of the trace of the matrix L V L<sup>T</sup>. | + | ** Alternatively, the regularization constant can be specified manually as a percentage of the trace of the matrix L V L<sup>T</sup>. |